Running conferences on Matrix: A post-mortem of Ansible Contributor Summit

Last week, we (the Ansible Community Team) hosted one of our regular Contributor Summits, but this one was different - we used Matrix as the backbone of the audience participation. In this post I’ll talk about why, how it went, and try to extrapolate to some more general thoughts about virtual conferences.

The Grand Design

Let’s talk a bit about how we set things up. We had a few requirements:

- We weren’t sure exactly how many attendees we’d have. Being attached to AnsibleFest meant that it was possible to have quite a surge

- We needed recordings for later viewing, as we have a global community

- We planned a hackathon, so we needed breakout rooms, pair programming, etc.

- We’d like to use as much FOSS as we can (although we’re fairly stuck with YouTube at this point as we already have a channel)

Traditionally, we have used BlueJeans or Google Meet for our virtual events (standard video meeting tools), and used BlueJeans Primetime when we expected a large attendance (which uses a presenter/spectator format). This has some consequences:

- We lose people over a barrier to entry

  - Some don’t see the announcements

  - Of those that do, some won’t sign up

  - Of those that do, some won’t join the meeting

- Chat history is lost at the end of the call

- Chat is secondary and often hidden by default

- Manual process to upload recordings

- Not FOSS

We wanted to do better, and our adoption of Matrix during the summer gave us a chance to try a different way. Because of needs (1) & (2) we can’t use the Matrix-provided in-room video, but by streaming direct to YouTube and then giving the video URL to the attendees, we get a presenter/spectator model (just like BlueJeans Primetime, which our audience was OK with last year).

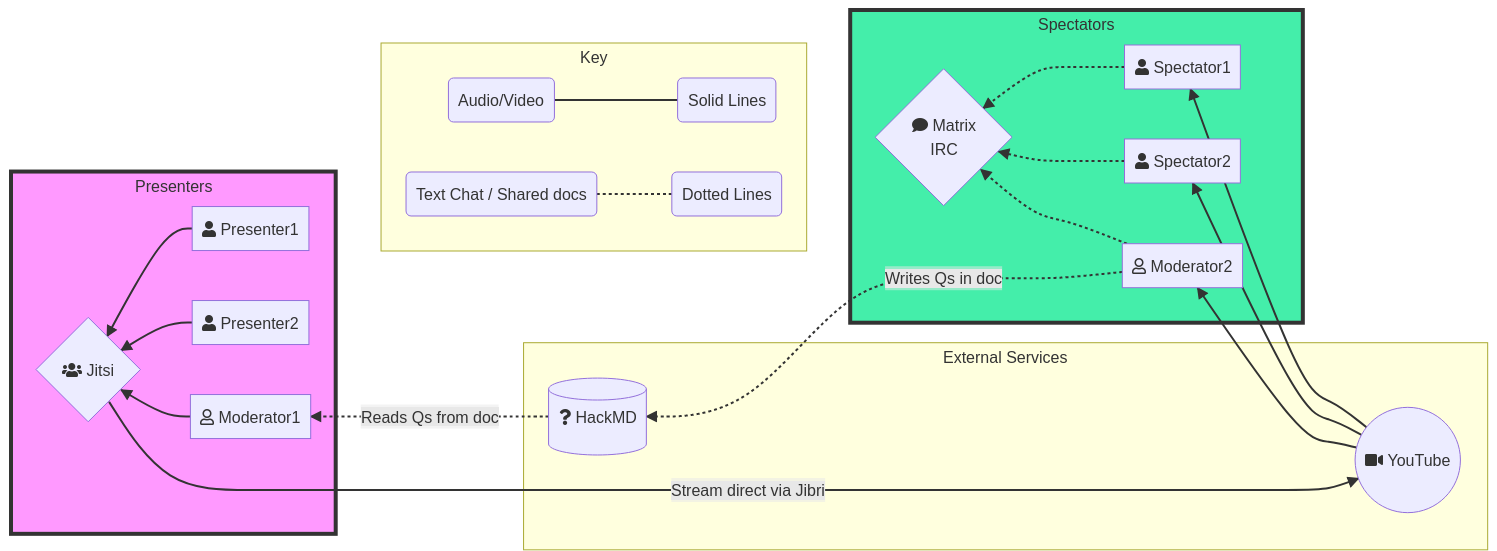

This was the final architecture we came up with:

Arrows indicate content flow - that is, the presenters do their talk on Jitsi, which sends the audio/video to YouTube, spectators watch the YouTube stream and discuss in chat, and moderators in chat feed audience Q&A back to Jitsi.

Overall, we were pretty happy with this. I got the room events (for all the rooms related to the conference) from the Matrix API, and found that:

- We had 103 unique IDs say something or post a reaction during the event

  - 29 had a libera.chat domain (indicating an IRC user) - that’s ~28%

- There were 2680 messages and 530 reactions

  - Reactions thus making up ~17% of the total

- Looking at how many users did each thing

  - 94 IDs posted at least one m.room.message

  - 57 IDs posted at least one m.reaction

  - 61 IDs used either a reaction, an edit, or a reply at least once.
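For anyone wanting to run a similar tally, here is a minimal sketch of how numbers like these could be derived. It is not the script used for the figures above. It assumes the room events have already been fetched from the Matrix API into a list of dicts, and the names `server_name`, `is_rich` and `summarise` are made up for illustration. The event shapes follow the Matrix spec: reactions are `m.reaction` events, edits carry `rel_type` `m.replace`, and replies carry `m.in_reply_to` inside `m.relates_to`.

```python
from collections import Counter

COUNTED_TYPES = ("m.room.message", "m.reaction")


def server_name(user_id: str) -> str:
    """Return the server part of a Matrix ID such as '@alice:example.org'."""
    return user_id.split(":", 1)[1]


def is_rich(event: dict) -> bool:
    """True if the event is a reaction, an edit, or a reply."""
    if event["type"] == "m.reaction":
        return True
    relates = event.get("content", {}).get("m.relates_to", {})
    return relates.get("rel_type") == "m.replace" or "m.in_reply_to" in relates


def summarise(events: list[dict]) -> dict:
    """Tally senders, messages, and reactions; other event types are ignored."""
    relevant = [e for e in events if e.get("type") in COUNTED_TYPES]
    by_type = Counter(e["type"] for e in relevant)
    senders = {e["sender"] for e in relevant}
    total = len(relevant)
    return {
        "unique_senders": len(senders),
        # Assumption: bridged IRC users show up with a libera.chat server name.
        "irc_senders": sum(1 for s in senders if server_name(s) == "libera.chat"),
        "messages": by_type["m.room.message"],
        "reactions": by_type["m.reaction"],
        "reaction_share": by_type["m.reaction"] / total if total else 0.0,
        "rich_feature_users": len({e["sender"] for e in relevant if is_rich(e)}),
    }
```

Calling `summarise(events)` on the combined events from every conference room gives one dictionary of totals. Counting per user with sets, rather than per event, is what separates "how many reactions were there" from "how many people used reactions".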

For me, that’s big news - in a community that only formally adopted Matrix a few months ago, over half the participants used features only available on Matrix. I’m going to speak more about why that matters below, but first I want to discuss what did & didn’t work well.

Jagged Little Pill

This was an experiment, and all experiments have sharp edges. However, the main thing I want to note here is that the sharp edges mainly affected us, the moderators, and to some extent the presenters. The viewers I have spoken to so far seemed satisfied with the event.

Problem 1 - Handling Audience Q&A

This was clunky to handle. With no dedicated Q&A tooling in Matrix, it was always going to be difficult to work out how to get questions in front of the speakers.

We messed about with several options, but eventually settled on the simplest. A chat moderator would interact with the audience, and would copy questions to a shared doc (we use HackMD), and would mark copied questions with an :eyes: reaction so the audience knew it was tracked. The Jitsi moderator would then read out the questions, so that speakers didn’t have to worry about finding them.

This worked, but it was not a great experience for the moderators, as it generated a lot of extra overhead. This is probably the highest priority thing to improve, for me.

Problem 2 - Jitsi Audio Issues

We selected Jitsi because it can natively handle the stream to YouTube (rather than requiring a moderator to handle the stream via OBS), which meant we didn’t need to have one person responsible for a whole 8-hour stream.

We ran our own Jitsi setup rather than use meet.jit.si because the latter is frequently overloaded/unstable - it was a lower risk to build our own, even on an untested provider. We used Unispace because they (unlike many others) have Jibri available as part of the cluster setup. This gave us a turn-key YouTube-enabled meeting room at a decent price, I was impressed!

Jitsi wasn’t perfect though - there were times when we lost audio from/to specific people in the meeting (this doesn’t seem to be just us, there’s an open GitHub issue about the problem). Mostly it wasn’t a big deal, but it did cause a major problem when we lost the audio to & from one presenter, making it very hard to get them to stop their talk while we fixed the issue.

I’m not too worried about this. Audio/video issues can strike any online event, and given we had only one major problem with ~25 speakers… that’s OK for a first try.

So Far So Good

The rest was good. Really good!

Intermittent audio issues aside, speakers seemed content - Jitsi is really just like any other video meeting, and we’re all far too familiar with those by now. They didn’t have to worry about the streaming, Q&A, etc, just turn up and speak. I think we can improve our Jitsi config, upgrade the version, enable the pre-join screen, etc, but overall I think we did a good job of making it easy and fun to present at our event.

Viewers also seemed happy. In particular, I received a lot of positive feedback like:

- how lively the chat felt

- how it felt like a proper hallway track

- how the use of YouTube meant it worked seamlessly on mobile devices

- how we weren’t requiring yet-another-login to something they’ll never use again

- having the history & persistence of the chat room

All excellent points, and I’m particularly glad to hear that it felt more “alive” and more “hallway-ish”, because that is the very thing missing from most online events, and what I was trying to fix.

Images and Words

So now the fun part - here’s why I think this worked so well. Brace yourself for some amateur sociology :)

When I think about what we did this time vs. what we did before, the things that strike me are:

- That everyone’s role is clear - no quandary about whether you’re supposed to ask your question on video or in chat, or having to wait for an appropriate pause. Type away! Moderators will pick it up.

- Chat is front-and-center, the main focus. Not relegated to the sidebar, not an afterthought, and not hidden by default.

The chat in Matrix is rich - replies, edits, reactions, even typing notifications make for a much more immersive experience than the normal chat you would find in a virtual meeting. That’s not a criticism of those tools - they’re built for meetings after all. But it’s not sufficient for an event.

What we miss in this current pandemic-crippled world is the face-to-face-ness, the chat, the exchange. When I think of a group of 6 people around a table, there’s probably at least 10 conversations going on - we tune in and out of things depending on the topic and the speaker. Hidden in that is a lot of non-verbal stuff - nodding, hand gestures, facial expressions, etc, that even the best video tools cannot capture.

I think a rich text interface helps a lot with replacing that non-verbal part:

- Reactions help replace the “nod”, the “thumbs up”, the “shocked face” - without interrupting the flow of the text

- Replies help us to keep context as we tune in-and-out of different parts of the chat

- Typing notifications allow us to “see” the other person gathering their thoughts for the next statement, instead of jumping in and “interrupting”

This is armchair psychology, of course, but everyone I spoke to had a similar feeling of being more connected during this event. That held true in the break - our conference was Tue & Fri, with a larger Ansible event in the middle, and yet folks were still hopping into chat to talk. We have people who are now, a week later, still present and helping out. The community has grown, at least partly because of the connections they were able to make during the Summit.

I also promised to return to my data - and I think it supports my argument. I’m asserting that the event felt good because we had rich tools to work with, and the data suggests attendees agree. With such a big take-up in features that we’ve only recently gained access to, I would conclude that they’re important; that there is a need for reactions, replies, and so on - even if they seem trivial to some.

Coda

I’m really happy with the feedback so far. We’re definitely in an “iterate and improve” place, rather than a “rip it out and try something else” place. Q&A is the big piece for me. I don’t want to do threads because I think questions are part of the flow of the discussion (and I have “opinions” on threads in a synchronous medium anyway); pinned messages could work so long as the moderators can see the pins, or perhaps a variant of the TWIM-style bot could be used to gather Qs by reaction. Something to explore, anyway.
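As a rough sketch of the gather-by-reaction idea, the snippet below works on events that have already been fetched, not as a live bot. Everything in it is an assumption for illustration: the function name `collect_questions`, the rule that only a moderator's :eyes: reaction counts, and the choice to order questions by `origin_server_ts`. The field names themselves (`m.relates_to`, `key`, `event_id`) are the standard ones for Matrix reaction events.

```python
EYES = "\U0001F440"  # the :eyes: emoji used to mark tracked questions


def collect_questions(events: list[dict], moderators: set[str]) -> list[tuple[str, str]]:
    """Return (sender, body) for every message a moderator marked with :eyes:."""
    flagged = set()
    for event in events:
        if event.get("type") != "m.reaction" or event.get("sender") not in moderators:
            continue
        relates = event.get("content", {}).get("m.relates_to", {})
        if relates.get("key") == EYES and "event_id" in relates:
            flagged.add(relates["event_id"])

    questions = [
        e for e in events
        if e.get("type") == "m.room.message" and e.get("event_id") in flagged
    ]
    # Oldest first, so the questions are read out in the order they were asked.
    questions.sort(key=lambda e: e.get("origin_server_ts", 0))
    return [(e["sender"], e.get("content", {}).get("body", "")) for e in questions]
```

With something like this, the shared doc could be generated from the reactions instead of being filled in by hand, while questions stay in the flow of the chat.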

By any measure, this has been a positive trial, and I look forward to our next event and putting some of this into practice!

(By the way, congrats if you got the heading theme - they’re all album titles. In order - Edenbridge, Alanis Morissette, Bryan Adams, Dream Theater, and Led Zeppelin :P)